Regression With Fusion Data#

The aim of this notebook is to regress the time until a disruption occurs given a current state in time.

In reality, our dataset is comprised of multiple shots, where each shot has individual time readings.

Shot Number |

Time Step |

Feature 1 |

Feature 2 |

|---|---|---|---|

1 |

\(t_0\) |

- |

- |

1 |

\(t_1\) |

- |

- |

1 |

\(t_2\) |

- |

- |

1 |

… |

- |

- |

1 |

\(t_m\) |

- |

- |

… |

|||

Shot Number |

Time Step |

Feature 1 |

Feature 2 |

—————– |

———————- |

—————– |

—————– |

N |

\(t_0\) |

- |

- |

N |

\(t_1\) |

- |

- |

N |

\(t_2\) |

- |

- |

N |

… |

- |

- |

N |

\(t_m\) |

- |

- |

In good approximation, we can treat individual readings in time as independent measurements and regress directly upon them.

You can think of this as a sort of state estimation:

Given state \(S_i\), independent of \(S_{j \neq i}\), we want to regress how long the stability of our fusion process will last.

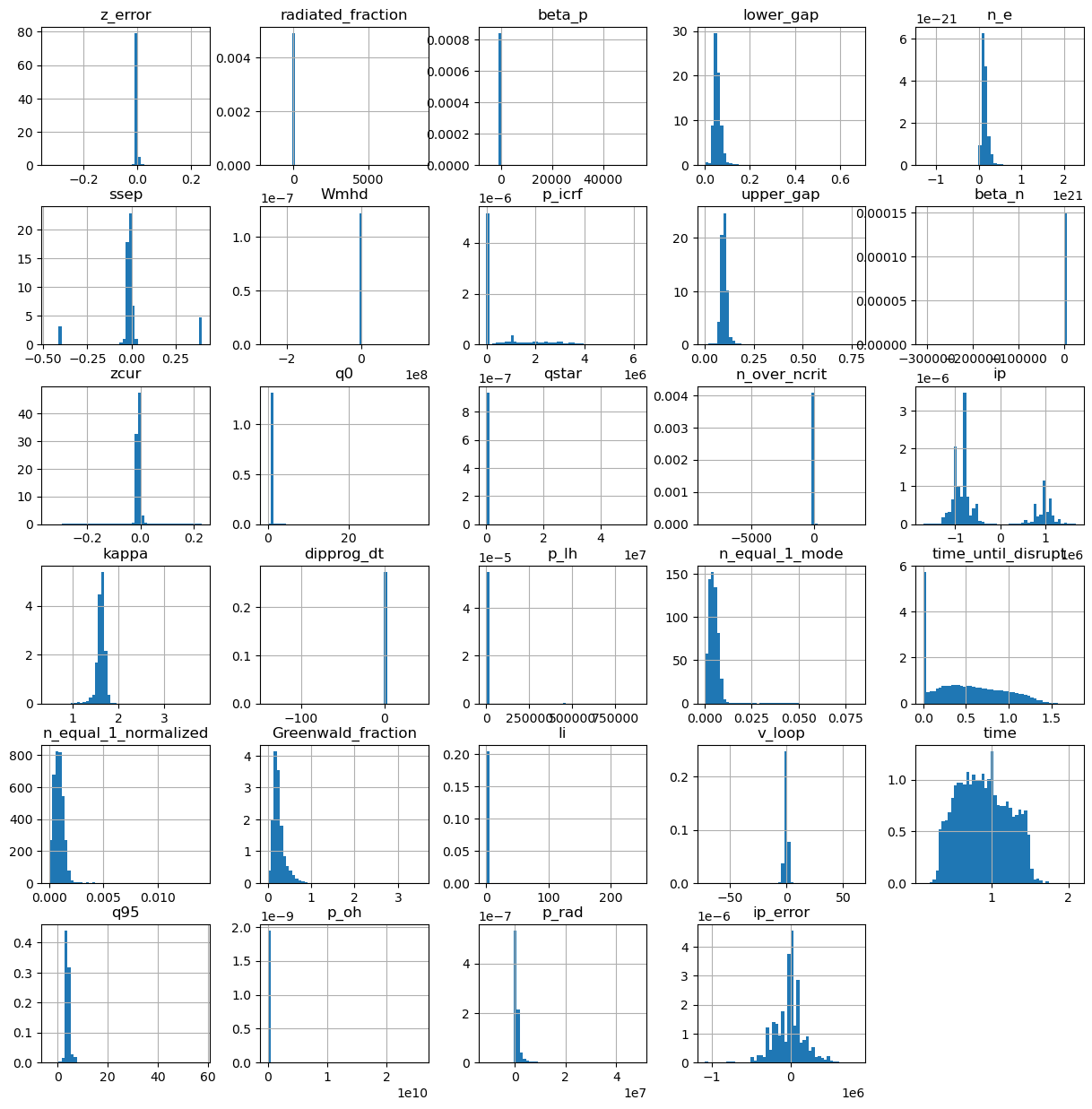

Lets load our data and take a look at the features. We also need to drop some features as these will bias the regression.

We will drop:

Disruptive - this is a binary label indicating whether or not a disruption has occured.

Shot - this is an indexing variable for individual shots.

import pandas as pd

import matplotlib.pyplot as plt

df = pd.read_csv("full_db-complete_classified_0.1s.csv",sep=',',index_col=None)

df_regression = df.drop(columns=['disruptive','shot']) # Drop disruptive - binary la

df_regression = df_regression[df.time_until_disrupt > 0]

df_regression = df_regression.sample(frac=1.0) # Random shuffle shots

df_regression.head()

| z_error | radiated_fraction | beta_p | lower_gap | n_e | ssep | Wmhd | p_icrf | upper_gap | beta_n | ... | time_until_disrupt | n_equal_1_normalized | Greenwald_fraction | li | v_loop | time | q95 | p_oh | p_rad | ip_error | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 148403 | -0.000308 | 0.348539 | 0.294560 | 0.049893 | 2.203347e+20 | -0.025936 | 21782.460938 | 3.840209e+01 | 0.093674 | 0.742089 | ... | 0.0160 | 0.001137 | 0.604698 | 1.259797 | -2.511963 | 1.0899 | 2.940394 | 1.125851e+06 | 392415.960065 | 271289.0625 |

| 169792 | 0.000219 | 0.018243 | 0.257212 | 0.078944 | 1.575422e+20 | -0.013643 | 44130.542969 | 3.537796e+06 | 0.103161 | 0.439488 | ... | 0.2849 | 0.001021 | 0.309502 | 1.414968 | -0.851196 | 1.4200 | 4.553412 | 4.030026e+05 | 71890.617371 | 104532.6250 |

| 275818 | 0.000992 | 0.415253 | 0.135165 | 0.044478 | 7.909377e+19 | -0.018565 | 23948.123047 | 3.787166e+01 | 0.097886 | 0.223583 | ... | 1.2241 | 0.000308 | 0.148950 | 1.505030 | -0.808014 | 0.5200 | 4.437774 | 8.496335e+05 | 352828.804445 | -13796.6875 |

| 44013 | 0.000420 | -0.329507 | 0.092925 | 0.052791 | 7.200679e+19 | 0.010766 | 26898.298828 | 1.013809e+02 | 0.087245 | 0.192169 | ... | 0.1745 | 0.000827 | 0.106209 | 1.382588 | 0.796661 | 0.6400 | 3.791784 | -1.451691e+06 | 478308.292191 | -234166.7500 |

| 22454 | 0.001223 | 0.350599 | 0.189295 | 0.055474 | 1.547137e+20 | -0.007201 | 53719.265625 | 7.274901e+05 | 0.108699 | 0.408583 | ... | 0.8579 | 0.000525 | 0.233198 | 1.368598 | -1.063354 | 0.6800 | 3.532992 | 1.178322e+06 | 668175.674777 | -209515.4375 |

5 rows × 29 columns

Lets visualize our data.

df_regression.hist(figsize=(15,15),bins=50,density=True)

array([[<AxesSubplot:title={'center':'z_error'}>,

<AxesSubplot:title={'center':'radiated_fraction'}>,

<AxesSubplot:title={'center':'beta_p'}>,

<AxesSubplot:title={'center':'lower_gap'}>,

<AxesSubplot:title={'center':'n_e'}>],

[<AxesSubplot:title={'center':'ssep'}>,

<AxesSubplot:title={'center':'Wmhd'}>,

<AxesSubplot:title={'center':'p_icrf'}>,

<AxesSubplot:title={'center':'upper_gap'}>,

<AxesSubplot:title={'center':'beta_n'}>],

[<AxesSubplot:title={'center':'zcur'}>,

<AxesSubplot:title={'center':'q0'}>,

<AxesSubplot:title={'center':'qstar'}>,

<AxesSubplot:title={'center':'n_over_ncrit'}>,

<AxesSubplot:title={'center':'ip'}>],

[<AxesSubplot:title={'center':'kappa'}>,

<AxesSubplot:title={'center':'dipprog_dt'}>,

<AxesSubplot:title={'center':'p_lh'}>,

<AxesSubplot:title={'center':'n_equal_1_mode'}>,

<AxesSubplot:title={'center':'time_until_disrupt'}>],

[<AxesSubplot:title={'center':'n_equal_1_normalized'}>,

<AxesSubplot:title={'center':'Greenwald_fraction'}>,

<AxesSubplot:title={'center':'li'}>,

<AxesSubplot:title={'center':'v_loop'}>,

<AxesSubplot:title={'center':'time'}>],

[<AxesSubplot:title={'center':'q95'}>,

<AxesSubplot:title={'center':'p_oh'}>,

<AxesSubplot:title={'center':'p_rad'}>,

<AxesSubplot:title={'center':'ip_error'}>, <AxesSubplot:>]],

dtype=object)

Preprocessing#

We will create a train test split for this dataset, using the traditional 70/15/15% split.

We are also going to add a flag for taking the logarithm of time.

from sklearn.preprocessing import MinMaxScaler

############# Important Flag ##############

log_time = False

###########################################

def create_train_test_split(dataset,log_time=False):

def return_x_y(dataframe,log_time=False):

x = dataframe.drop(columns=['time_until_disrupt']).to_numpy()

if log_time:

y = np.log(dataframe['time_until_disrupt'].values)

else:

y = dataframe['time_until_disrupt'].values

return x,y

Nt = int(0.7 * len(dataset))

train = dataset[:Nt]

test_val = dataset[Nt:]

Nv = int(0.5 *len(test_val))

val = test_val[:Nv]

test = test_val[Nv:]

print("Total dataset size: ",len(dataset))

print("Number of Training data points: ",len(train))

print("Number of Validation data points: ",len(val))

print("Number of Testing data points: ",len(test))

train_x,train_y = return_x_y(train,log_time)

val_x,val_y = return_x_y(val,log_time)

test_x,test_y = return_x_y(test,log_time)

return train_x,train_y,val_x,val_y,test_x,test_y

train_x,train_y,val_x,val_y,test_x,test_y = create_train_test_split(df_regression,log_time=log_time)

Total dataset size: 63501

Number of Training data points: 44450

Number of Validation data points: 9525

Number of Testing data points: 9526

Lets scale our data. Here we will use MinMax scaling on the interval (-1,1). You can experiment with other scaling such as StandardScalers (z-score normalization) if you wish.

feature_scaler = MinMaxScaler((-1,1))

feature_scaler.fit(df_regression.drop(columns=['time_until_disrupt']).to_numpy()) # Lets use global statistics for simplicity here.

x_train_scaled = feature_scaler.transform(train_x)

x_val_scaled = feature_scaler.transform(val_x)

x_test_scaled = feature_scaler.transform(test_x)

print("Features")

print("Training:",x_train_scaled.max(),x_train_scaled.min())

print("Validation:",x_val_scaled.max(),x_val_scaled.min())

print("Testing:",x_test_scaled.max(),x_test_scaled.min())

if log_time: # Declared above

target_scaler = MinMaxScaler((0,1))

target_scaler.fit(np.log(df_regression['time_until_disrupt'].values.reshape(-1,1))) # Using global statistics for simplicity here

y_train_scaled = target_scaler.transform(train_y.reshape(-1,1)).flatten()

y_val_scaled = target_scaler.transform(val_y.reshape(-1,1)).flatten()

y_test_scaled = target_scaler.transform(test_y.reshape(-1,1)).flatten()

else:

y_train_scaled = train_y.copy()

y_val_scaled = val_y.copy()

y_test_scaled = test_y.copy()

print(" ")

print("Targets")

print("Training:",y_train_scaled.max(),y_train_scaled.min())

print("Validation:",y_val_scaled.max(),y_val_scaled.min())

print("Testing:",y_test_scaled.max(),y_test_scaled.min())

Features

Training: 1.0000000000000004 -1.0000000000000002

Validation: 1.0 -1.0

Testing: 1.0000000000000002 -1.0

Targets

Training: 1.7724999487400055 0.0004999637603759

Validation: 1.7924999594688416 0.0001000165939331

Testing: 1.7524999380111694 0.0009999275207519

Model Definition#

We will use a simple Deep Neural Network (DNN), commonly referred to as a Multi-Layer Perceptron (MLP).

We are going to make this as explicit as possible for clarity. We could use nn.Sequential to package this more neatly if we so choose, and also be more dynamic in our model creation.

import torch

from torch import nn

from torch.nn import BatchNorm1d

class MLP(nn.Module):

def __init__(self,input_shape,output_shape):

super(MLP, self).__init__()

self.init_layer = nn.Linear(input_shape,64)

self.B0 = nn.BatchNorm1d(64)

self.L1 = nn.Linear(64,256)

self.B1 = nn.BatchNorm1d(256)

self.L2 = nn.Linear(256,512)

self.B2 = nn.BatchNorm1d(512)

self.L3 = nn.Linear(512,256)

self.B3 = nn.BatchNorm1d(256)

self.L4 = nn.Linear(256,128)

self.B4 = nn.BatchNorm1d(128)

self.output = nn.Linear(128,output_shape)

self.activation = nn.SELU()

self.output_activation = nn.ReLU()

def forward(self,x):

x = self.activation(self.B0(self.init_layer(x))) # SELU(BNorm(Linear(x)))

x = self.activation(self.B1(self.L1(x)))

x = self.activation(self.B2(self.L2(x)))

x = self.activation(self.B3(self.L3(x)))

x = self.activation(self.B4(self.L4(x)))

x = self.output_activation(self.output(x)).squeeze(1) # Time must be > 0, ReLU is good choice here.

return x

regressor = MLP(input_shape=x_train_scaled.shape[1],output_shape=1) # Singular output

print(regressor)

MLP(

(init_layer): Linear(in_features=28, out_features=64, bias=True)

(B0): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(L1): Linear(in_features=64, out_features=256, bias=True)

(B1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(L2): Linear(in_features=256, out_features=512, bias=True)

(B2): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(L3): Linear(in_features=512, out_features=256, bias=True)

(B3): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(L4): Linear(in_features=256, out_features=128, bias=True)

(B4): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(output): Linear(in_features=128, out_features=1, bias=True)

(activation): SELU()

(output_activation): ReLU()

)

Lets see if we get output.

regressor(torch.tensor(x_train_scaled[:3]).float())

tensor([1.1473, 0.0000, 0.0000], grad_fn=<SqueezeBackward1>)

We are going to use built in pytorch functions for creating our data loading pipeline. Since we have simple tabular data, we can use TensorDataset(x,y).

Note that we are going to need to convert these numpy arrays to torch.tensor() objects first.

from torch.utils.data import TensorDataset, DataLoader

train_dataset = TensorDataset(torch.tensor(x_train_scaled),torch.tensor(y_train_scaled))

val_dataset = TensorDataset(torch.tensor(x_val_scaled),torch.tensor(y_val_scaled))

test_dataset = TensorDataset(torch.tensor(x_test_scaled),torch.tensor(y_test_scaled))

We can also build custom datasets, which you will see in next weeks lectures on normalizing flows. We will explain in more detail how those work then, but for now you should have knowledge of the basic method of getting data from these objects.

train_dataset.__getitem__(0)

(tensor([ 0.1039, -0.6623, 0.1553, -0.8241, -0.2839, 0.0161, -0.5669, -0.9937,

-0.7154, 0.4758, 0.1006, -0.9606, -0.5235, -0.7937, -0.3066, 0.1707,

0.5250, -0.9998, -0.8750, -0.7934, -0.3216, -0.0954, 0.0335, -0.0196,

-0.6106, -0.5459, -0.9736, 0.4466], dtype=torch.float64),

tensor(0.0160, dtype=torch.float64))

All pytorch datasets must have a getitem() function. This will be imporant when we want to pass these objects to a DataLoader(). Again, we will go into more detail on all of this next week. But lets get familiar with the basic usage.

We know that when we train deep learning models we will use batches of inputs to compute gradients. An efficient way to batch your data is to use the DataLoader() functions from pytorch. This will pass a series of random indices to your TensorDataset’s getitem() function and form batches.

train_loader = DataLoader(train_dataset,batch_size=10)

for i,data in enumerate(train_loader):

x = data[0]

y = data[1]

print("Batch {0}".format(i),"-> x shape: ",x.shape," y shape: ",y.shape)

break

Batch 0 -> x shape: torch.Size([10, 28]) y shape: torch.Size([10])

Now lets create a function to return dataloaders for the three datasets we have created.

# Create dataloaders to iterate.

# We take as input the three TensorDatasets along with the batch sizes we want to use.

def CreateLoaders(train_dataset,val_dataset,test_dataset,train_batch,val_batch,test_batch):

train_loader = DataLoader(train_dataset,

batch_size=train_batch,

shuffle=True)

val_loader = DataLoader(val_dataset,

batch_size=val_batch,

shuffle=False)

test_loader = DataLoader(test_dataset,

batch_size=val_batch,

shuffle=False)

return train_loader,val_loader,test_loader

train_loader,val_loader,test_loader = CreateLoaders(train_dataset,val_dataset,test_dataset,10,10,10)

Training Function#

We almost have everything we need to start training our model.

Datasets in a pytorch format.

DataLoaders for batch creation.

A training function - lets build this.

Lets write the training function in such a way that we can use it for any network.

There are two main components:

Training loop

Validation loop

We also need a clever way to store our parameters. Lets use a dictionary to do this.

Note that we are going to assume GPU usage here, so use your notebook on sciclone!

You can see the information regarding your GPU with the command below:

!nvidia-smi

Wed Jun 5 10:27:28 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 551.68 Driver Version: 551.68 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name TCC/WDDM | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 4090 WDDM | 00000000:01:00.0 On | Off |

| 0% 34C P8 19W / 450W | 2156MiB / 24564MiB | 6% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 5640 C+G ...oogle\Chrome\Application\chrome.exe N/A |

| 0 N/A N/A 5756 C+G ...5n1h2txyewy\ShellExperienceHost.exe N/A |

| 0 N/A N/A 6936 C+G ...ems\FX\SubAgent\AlienFXSubAgent.exe N/A |

| 0 N/A N/A 8076 C+G C:\Windows\explorer.exe N/A |

| 0 N/A N/A 8160 C ...James\.conda\envs\ptorch\python.exe N/A |

| 0 N/A N/A 9568 C+G ...nt.CBS_cw5n1h2txyewy\SearchHost.exe N/A |

| 0 N/A N/A 9592 C+G ...2txyewy\StartMenuExperienceHost.exe N/A |

| 0 N/A N/A 12132 C+G ...t.LockApp_cw5n1h2txyewy\LockApp.exe N/A |

| 0 N/A N/A 17240 C+G ...Programs\Microsoft VS Code\Code.exe N/A |

| 0 N/A N/A 23292 C+G ...__8wekyb3d8bbwe\WindowsTerminal.exe N/A |

| 0 N/A N/A 31464 C+G ...siveControlPanel\SystemSettings.exe N/A |

| 0 N/A N/A 31864 C+G ...ekyb3d8bbwe\PhoneExperienceHost.exe N/A |

| 0 N/A N/A 33840 C+G ...on\125.0.2535.85\msedgewebview2.exe N/A |

| 0 N/A N/A 37792 C+G ...wekyb3d8bbwe\XboxGameBarWidgets.exe N/A |

| 0 N/A N/A 42028 C+G ...crosoft\Edge\Application\msedge.exe N/A |

+-----------------------------------------------------------------------------------------+

If you don’t see an output with the above command please let me know and we will sort it.

config = {"seed":8,

"name": "MyRegressionModel",

"run_val":1,

"model": {

"input_shape":28,

"output_shape": 1,

},

"optimizer": {

"lr": 7e-4,

},

"num_epochs": 100,

"dataloader": {

"train": {

"batch_size": 64

},

"val": {

"batch_size": 64

},

"test": {

"batch_size": 64

},

},

"output": {

"dir":"./"

}

}

Below is our training function. It will operate as follows:

We set seeds for commonly used packages for reproducability.

Create a directory with name corresponding to the name field in the above dictionary.

We create our dataloaders - we only need train/val, but test will be created anyways and not used.

We create an instance of our MLP (funciton defined above)

We create an instance of an optimizer - Adam.

Define a learning rate scheduler - CosineAnnealing. We will see what this looks like graphically.

Define our loss function - SmoothL1Loss - https://pytorch.org/docs/stable/generated/torch.nn.SmoothL1Loss.html

For each epoch iterate over all batches in the training loader and train.

At the end of each epoch, iterate over the validation loader - DO NOT apply gradients.

import os

import json

import random

import pkbar

import torch.optim as optim

from torch.optim import lr_scheduler

import torch.nn as nn

import datetime

import shutil

import time

def trainer(config,train_dataset,val_dataset,test_dataset):

# Setup random seed

torch.manual_seed(config['seed'])

np.random.seed(config['seed'])

random.seed(config['seed'])

torch.cuda.manual_seed(config['seed'])

# Create experiment name

exp_name = config['name']

print(exp_name)

# Create directory structure

output_folder = config['output']['dir']

output_path = os.path.join(output_folder,exp_name)

if os.path.exists(output_path):

timestamp = time.time()

dt_object = datetime.datetime.fromtimestamp(timestamp)

formatted_time = dt_object.strftime('%H_%M_%S')

output_path = output_path +"_"+ str(formatted_time)

os.mkdir(output_path)

with open(os.path.join(output_path,'config.json'),'w') as outfile:

json.dump(config, outfile)

# Load the dataset

print('Creating Loaders.')

train_batch_size = config['dataloader']['train']['batch_size']

val_batch_size = config['dataloader']['val']['batch_size']

test_batch_size = config['dataloader']['val']['batch_size']

train_loader,val_loader,test_loader = CreateLoaders(train_dataset,val_dataset,test_dataset,train_batch_size,val_batch_size,test_batch_size)

history = {'train_loss':[],'val_loss':[],'lr':[]}

print("Training Size: {0}".format(len(train_loader.dataset)))

print("Validation Size: {0}".format(len(val_loader.dataset)))

# Create the model

input_shape = config['model']['input_shape']

output_shape = config['model']['output_shape']

net = MLP(input_shape=input_shape,output_shape=output_shape)

t_params = sum(p.numel() for p in net.parameters())

print("Network Parameters: ",t_params)

device = torch.device('cuda')

net.to('cuda')

# Optimizer

num_epochs=int(config['num_epochs'])

lr = float(config['optimizer']['lr'])

optimizer = optim.Adam(list(filter(lambda p: p.requires_grad, net.parameters())), lr=lr)

num_steps = len(train_loader) * num_epochs

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer=optimizer, T_max=num_steps, last_epoch=-1,

eta_min=0)

startEpoch = 0

global_step = 0

print('=========== Optimizer ==================:')

print(' LR:', lr)

print(' num_epochs:', num_epochs)

print('')

# Loss Function

loss_function = nn.SmoothL1Loss()

for epoch in range(startEpoch,num_epochs):

kbar = pkbar.Kbar(target=len(train_loader), epoch=epoch, num_epochs=num_epochs, width=20, always_stateful=False)

net.train()

running_loss = 0.0

for i, data in enumerate(train_loader):

input = data[0].to('cuda').float()

y = data[1].to('cuda').float()

optimizer.zero_grad()

with torch.set_grad_enabled(True):

y_hat = net(input)

loss = loss_function(y,y_hat)

loss.backward()

#torch.nn.utils.clip_grad_norm_(net.parameters(), max_norm=0.5,error_if_nonfinite=True)

optimizer.step()

scheduler.step()

running_loss += loss.item() * input.shape[0]

kbar.update(i, values=[("loss", loss.item())])

global_step += 1

history['train_loss'].append(running_loss / len(train_loader.dataset))

history['lr'].append(scheduler.get_last_lr()[0])

######################

## validation phase ##

######################

if bool(config['run_val']):

net.eval()

val_loss = 0.0

with torch.no_grad():

for i, data in enumerate(val_loader):

input = data[0].to('cuda').float()

y = data[1].to('cuda').float()

y_hat = net(input)

loss = loss_function(y,y_hat)

val_loss += loss

val_loss = val_loss.cpu().numpy() / len(val_loader)

history['val_loss'].append(val_loss)

kbar.add(1, values=[("val_loss", val_loss.item())])

name_output_file = config['name']+'_epoch{:02d}_val_loss_{:.6f}.pth'.format(epoch, val_loss)

else:

kbar.add(1,values=[('val_loss',0.)])

name_output_file = config['name']+'_epoch{:02d}_train_loss_{:.6f}.pth'.format(epoch, running_loss / len(train_loader.dataset))

filename = os.path.join(output_path, name_output_file)

checkpoint={}

checkpoint['net_state_dict'] = net.state_dict()

checkpoint['optimizer'] = optimizer.state_dict()

checkpoint['scheduler'] = scheduler.state_dict()

checkpoint['epoch'] = epoch

checkpoint['history'] = history

checkpoint['global_step'] = global_step

torch.save(checkpoint,filename)

print('')

trainer(config,train_dataset,val_dataset,test_dataset)

MyRegressionModel

Creating Loaders.

Training Size: 44450

Validation Size: 9525

Network Parameters: 316865

=========== Optimizer ==================:

LR: 0.0007

num_epochs: 100

Epoch: 1/100

695/695 [====================] - 5s 7ms/step - loss: 0.0512 - val_loss: 0.0361

Epoch: 2/100

695/695 [====================] - 8s 11ms/step - loss: 0.0374 - val_loss: 0.0366

Epoch: 3/100

695/695 [====================] - 8s 11ms/step - loss: 0.0353 - val_loss: 0.0324

Epoch: 4/100

695/695 [====================] - 8s 11ms/step - loss: 0.0336 - val_loss: 0.0309

Epoch: 5/100

695/695 [====================] - 8s 11ms/step - loss: 0.0318 - val_loss: 0.0278

Epoch: 6/100

695/695 [====================] - 8s 11ms/step - loss: 0.0311 - val_loss: 0.0306

Epoch: 7/100

695/695 [====================] - 8s 11ms/step - loss: 0.0296 - val_loss: 0.0296

Epoch: 8/100

695/695 [====================] - 7s 11ms/step - loss: 0.0295 - val_loss: 0.0273

Epoch: 9/100

695/695 [====================] - 8s 11ms/step - loss: 0.0284 - val_loss: 0.0298

Epoch: 10/100

695/695 [====================] - 8s 11ms/step - loss: 0.0276 - val_loss: 0.0254

Epoch: 11/100

695/695 [====================] - 8s 11ms/step - loss: 0.0269 - val_loss: 0.0241

Epoch: 12/100

695/695 [====================] - 8s 11ms/step - loss: 0.0263 - val_loss: 0.0258

Epoch: 13/100

695/695 [====================] - 8s 11ms/step - loss: 0.0255 - val_loss: 0.0235

Epoch: 14/100

695/695 [====================] - 8s 11ms/step - loss: 0.0248 - val_loss: 0.0254

Epoch: 15/100

695/695 [====================] - 8s 11ms/step - loss: 0.0243 - val_loss: 0.0220

Epoch: 16/100

695/695 [====================] - 7s 11ms/step - loss: 0.0240 - val_loss: 0.0247

Epoch: 17/100

695/695 [====================] - 8s 11ms/step - loss: 0.0234 - val_loss: 0.0240

Epoch: 18/100

695/695 [====================] - 8s 11ms/step - loss: 0.0230 - val_loss: 0.0222

Epoch: 19/100

695/695 [====================] - 8s 11ms/step - loss: 0.0226 - val_loss: 0.0206

Epoch: 20/100

695/695 [====================] - 8s 11ms/step - loss: 0.0219 - val_loss: 0.0214

Epoch: 21/100

695/695 [====================] - 8s 11ms/step - loss: 0.0216 - val_loss: 0.0211

Epoch: 22/100

695/695 [====================] - 7s 11ms/step - loss: 0.0214 - val_loss: 0.0203

Epoch: 23/100

695/695 [====================] - 8s 11ms/step - loss: 0.0208 - val_loss: 0.0213

Epoch: 24/100

695/695 [====================] - 8s 11ms/step - loss: 0.0204 - val_loss: 0.0194

Epoch: 25/100

695/695 [====================] - 8s 11ms/step - loss: 0.0200 - val_loss: 0.0212

Epoch: 26/100

695/695 [====================] - 7s 11ms/step - loss: 0.0196 - val_loss: 0.0180

Epoch: 27/100

695/695 [====================] - 8s 11ms/step - loss: 0.0193 - val_loss: 0.0208

Epoch: 28/100

695/695 [====================] - 7s 11ms/step - loss: 0.0188 - val_loss: 0.0198

Epoch: 29/100

695/695 [====================] - 8s 11ms/step - loss: 0.0190 - val_loss: 0.0201

Epoch: 30/100

695/695 [====================] - 8s 11ms/step - loss: 0.0185 - val_loss: 0.0169

Epoch: 31/100

695/695 [====================] - 8s 11ms/step - loss: 0.0182 - val_loss: 0.0168

Epoch: 32/100

695/695 [====================] - 7s 11ms/step - loss: 0.0179 - val_loss: 0.0175

Epoch: 33/100

695/695 [====================] - 8s 11ms/step - loss: 0.0179 - val_loss: 0.0169

Epoch: 34/100

695/695 [====================] - 8s 11ms/step - loss: 0.0175 - val_loss: 0.0162

Epoch: 35/100

695/695 [====================] - 8s 11ms/step - loss: 0.0173 - val_loss: 0.0149

Epoch: 36/100

695/695 [====================] - 8s 11ms/step - loss: 0.0169 - val_loss: 0.0162

Epoch: 37/100

695/695 [====================] - 8s 11ms/step - loss: 0.0168 - val_loss: 0.0168

Epoch: 38/100

695/695 [====================] - 8s 11ms/step - loss: 0.0166 - val_loss: 0.0163

Epoch: 39/100

695/695 [====================] - 7s 11ms/step - loss: 0.0165 - val_loss: 0.0148

Epoch: 40/100

695/695 [====================] - 8s 11ms/step - loss: 0.0161 - val_loss: 0.0154

Epoch: 41/100

695/695 [====================] - 8s 11ms/step - loss: 0.0159 - val_loss: 0.0148

Epoch: 42/100

695/695 [====================] - 7s 11ms/step - loss: 0.0157 - val_loss: 0.0142

Epoch: 43/100

695/695 [====================] - 8s 11ms/step - loss: 0.0156 - val_loss: 0.0147

Epoch: 44/100

695/695 [====================] - 8s 11ms/step - loss: 0.0151 - val_loss: 0.0144

Epoch: 45/100

695/695 [====================] - 8s 11ms/step - loss: 0.0151 - val_loss: 0.0140

Epoch: 46/100

695/695 [====================] - 8s 11ms/step - loss: 0.0149 - val_loss: 0.0147

Epoch: 47/100

695/695 [====================] - 8s 11ms/step - loss: 0.0148 - val_loss: 0.0141

Epoch: 48/100

695/695 [====================] - 8s 11ms/step - loss: 0.0145 - val_loss: 0.0142

Epoch: 49/100

695/695 [====================] - 8s 11ms/step - loss: 0.0143 - val_loss: 0.0130

Epoch: 50/100

695/695 [====================] - 8s 11ms/step - loss: 0.0142 - val_loss: 0.0139

Epoch: 51/100

695/695 [====================] - 8s 11ms/step - loss: 0.0141 - val_loss: 0.0134

Epoch: 52/100

695/695 [====================] - 8s 11ms/step - loss: 0.0139 - val_loss: 0.0141

Epoch: 53/100

695/695 [====================] - 8s 11ms/step - loss: 0.0139 - val_loss: 0.0133

Epoch: 54/100

695/695 [====================] - 8s 11ms/step - loss: 0.0136 - val_loss: 0.0133

Epoch: 55/100

695/695 [====================] - 8s 11ms/step - loss: 0.0133 - val_loss: 0.0120

Epoch: 56/100

695/695 [====================] - 8s 11ms/step - loss: 0.0131 - val_loss: 0.0122

Epoch: 57/100

695/695 [====================] - 8s 11ms/step - loss: 0.0132 - val_loss: 0.0128

Epoch: 58/100

695/695 [====================] - 8s 11ms/step - loss: 0.0130 - val_loss: 0.0124

Epoch: 59/100

695/695 [====================] - 7s 11ms/step - loss: 0.0128 - val_loss: 0.0121

Epoch: 60/100

695/695 [====================] - 8s 11ms/step - loss: 0.0125 - val_loss: 0.0122

Epoch: 61/100

695/695 [====================] - 8s 11ms/step - loss: 0.0126 - val_loss: 0.0119

Epoch: 62/100

695/695 [====================] - 7s 11ms/step - loss: 0.0125 - val_loss: 0.0117

Epoch: 63/100

695/695 [====================] - 8s 11ms/step - loss: 0.0122 - val_loss: 0.0119

Epoch: 64/100

695/695 [====================] - 8s 11ms/step - loss: 0.0120 - val_loss: 0.0119

Epoch: 65/100

695/695 [====================] - 8s 11ms/step - loss: 0.0123 - val_loss: 0.0112

Epoch: 66/100

695/695 [====================] - 8s 11ms/step - loss: 0.0120 - val_loss: 0.0113

Epoch: 67/100

695/695 [====================] - 8s 11ms/step - loss: 0.0118 - val_loss: 0.0121

Epoch: 68/100

695/695 [====================] - 8s 11ms/step - loss: 0.0116 - val_loss: 0.0108

Epoch: 69/100

695/695 [====================] - 8s 11ms/step - loss: 0.0115 - val_loss: 0.0115

Epoch: 70/100

695/695 [====================] - 8s 11ms/step - loss: 0.0115 - val_loss: 0.0117

Epoch: 71/100

695/695 [====================] - 8s 11ms/step - loss: 0.0114 - val_loss: 0.0111

Epoch: 72/100

695/695 [====================] - 8s 11ms/step - loss: 0.0112 - val_loss: 0.0104

Epoch: 73/100

695/695 [====================] - 8s 11ms/step - loss: 0.0112 - val_loss: 0.0106

Epoch: 74/100

695/695 [====================] - 7s 11ms/step - loss: 0.0110 - val_loss: 0.0108

Epoch: 75/100

695/695 [====================] - 8s 11ms/step - loss: 0.0112 - val_loss: 0.0105

Epoch: 76/100

695/695 [====================] - 8s 11ms/step - loss: 0.0109 - val_loss: 0.0104

Epoch: 77/100

695/695 [====================] - 8s 11ms/step - loss: 0.0108 - val_loss: 0.0109

Epoch: 78/100

695/695 [====================] - 8s 11ms/step - loss: 0.0108 - val_loss: 0.0104

Epoch: 79/100

695/695 [====================] - 8s 11ms/step - loss: 0.0107 - val_loss: 0.0104

Epoch: 80/100

695/695 [====================] - 8s 11ms/step - loss: 0.0106 - val_loss: 0.0104

Epoch: 81/100

695/695 [====================] - 8s 11ms/step - loss: 0.0104 - val_loss: 0.0102

Epoch: 82/100

695/695 [====================] - 8s 11ms/step - loss: 0.0105 - val_loss: 0.0100

Epoch: 83/100

695/695 [====================] - 8s 11ms/step - loss: 0.0104 - val_loss: 0.0098

Epoch: 84/100

695/695 [====================] - 8s 11ms/step - loss: 0.0102 - val_loss: 0.0100

Epoch: 85/100

695/695 [====================] - 8s 11ms/step - loss: 0.0103 - val_loss: 0.0099

Epoch: 86/100

695/695 [====================] - 8s 11ms/step - loss: 0.0101 - val_loss: 0.0099

Epoch: 87/100

695/695 [====================] - 7s 11ms/step - loss: 0.0102 - val_loss: 0.0099

Epoch: 88/100

695/695 [====================] - 8s 11ms/step - loss: 0.0101 - val_loss: 0.0100

Epoch: 89/100

695/695 [====================] - 8s 11ms/step - loss: 0.0101 - val_loss: 0.0099

Epoch: 90/100

695/695 [====================] - 7s 11ms/step - loss: 0.0100 - val_loss: 0.0099

Epoch: 91/100

695/695 [====================] - 8s 11ms/step - loss: 0.0099 - val_loss: 0.0103

Epoch: 92/100

695/695 [====================] - 8s 11ms/step - loss: 0.0101 - val_loss: 0.0098

Epoch: 93/100

695/695 [====================] - 7s 11ms/step - loss: 0.0099 - val_loss: 0.0108

Epoch: 94/100

695/695 [====================] - 8s 11ms/step - loss: 0.0100 - val_loss: 0.0104

Epoch: 95/100

695/695 [====================] - 8s 11ms/step - loss: 0.0098 - val_loss: 0.0109

Epoch: 96/100

695/695 [====================] - 8s 11ms/step - loss: 0.0099 - val_loss: 0.0098

Epoch: 97/100

695/695 [====================] - 8s 11ms/step - loss: 0.0099 - val_loss: 0.0097

Epoch: 98/100

695/695 [====================] - 8s 11ms/step - loss: 0.0098 - val_loss: 0.0095

Epoch: 99/100

695/695 [====================] - 8s 11ms/step - loss: 0.0098 - val_loss: 0.0095

Epoch: 100/100

695/695 [====================] - 8s 11ms/step - loss: 0.0098 - val_loss: 0.0096

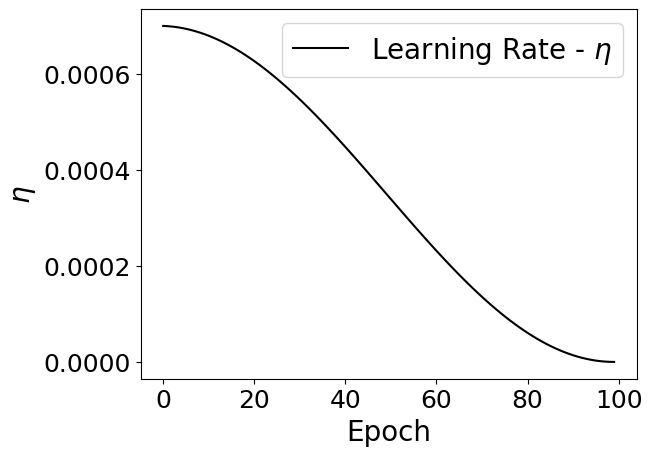

Lets take a look at what the learning rate scheduler has done for us:

dicte = torch.load(os.path.join(config['name'],os.listdir("MyRegressionModel")[-1]))

print(dicte.keys())

dict_keys(['net_state_dict', 'optimizer', 'scheduler', 'epoch', 'history', 'global_step'])

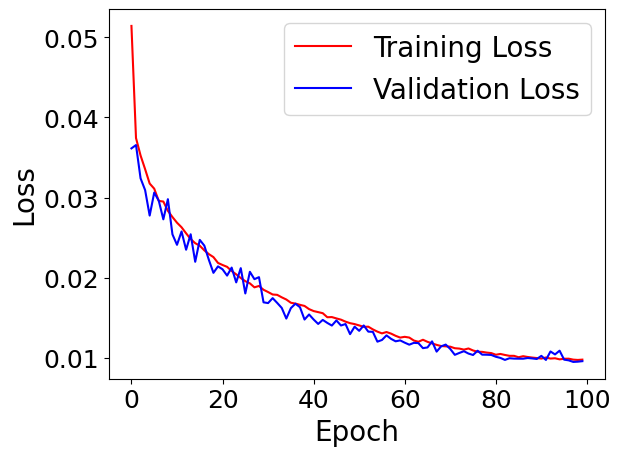

Within each .pth file, we have stored the weights at a specific epoch, along with a variety of other useful information. First lets plot the training and validation losses, along with the learning rate.

train_loss = dicte['history']['train_loss']

val_loss = dicte['history']['val_loss']

learning_rate = dicte['history']['lr']

plt.plot(train_loss,'r-',label="Training Loss")

plt.plot(val_loss,'b-',label='Validation Loss')

plt.legend(fontsize=20)

plt.xlabel('Epoch',fontsize=20)

plt.ylabel('Loss',fontsize=20)

plt.tick_params(axis='both', labelsize=18)

plt.show()

plt.plot(learning_rate,'k-',label=r"Learning Rate - $\eta$")

plt.legend(fontsize=20)

plt.xlabel('Epoch',fontsize=20)

plt.ylabel(r'$\eta$',fontsize=20)

plt.tick_params(axis='both', labelsize=18)

plt.show()

Notice how we have decreased the learning rate at each step corresponding to a cosine distribution. Specifically, we follow the formula below:

\(\eta_{t} = \eta_{\text{min}} + \frac{1}{2} (\eta_{\text{max}} - \eta_{\text{min}}) \left(1 + \cos\left(\frac{T_{\text{cur}}}{T_{\text{max}}} \pi\right)\right)\)

\(\eta_{t+1} = \eta_{t} + \frac{1}{2} (\eta_{\text{max}} - \eta_{\text{min}}) \left(1 - \cos\left(\frac{T_{\text{cur}}}{T_{\text{max}}} \pi\right)\right)\)

where:

\(\eta_{t}\) is the learning rate at time (t),

\(\eta_{t+1}\) is the learning rate at time (t+1),

\(\eta_{\text{min}}\) is the minimum learning rate,

\(\eta_{\text{max}}\) is the maximum learning rate,

\(T_{\text{cur}}\) is the current time step,

\(T_{\text{max}}\) is the maximum time step.

with \(T_{\text{cur}} \neq (2k+1)T_{\text{max}}\),

and \( T_{\text{cur}} = (2k+1)T_{\text{max}}. \)

Testing the Performance#

We have trained our model, and at each epoch we have saved the weights of the model to .pth file. This will be located in a folder corresponding to the “name” field of the config dictionary.

Lets load in the model, and look at some metrics.

input_shape = config['model']['input_shape']

output_shape = config['model']['output_shape']

net = MLP(input_shape=input_shape,output_shape=output_shape)

t_params = sum(p.numel() for p in net.parameters())

print("Network Parameters: ",t_params)

device = torch.device('cuda')

net.to('cuda')

dicte = torch.load(os.path.join(config['name'],os.listdir("MyRegressionModel")[-1]))

net.load_state_dict(dicte["net_state_dict"])

Network Parameters: 316865

<All keys matched successfully>

test_loader = DataLoader(test_dataset,batch_size=config['dataloader']['test']['batch_size'])

net.eval() # Eval mode

predictions = []

y_true = []

kbar = pkbar.Kbar(target=len(test_loader), width=20, always_stateful=False)

for i,data in enumerate(test_loader):

x = data[0].to('cuda').float()

with torch.set_grad_enabled(False): # Same as with torch.no_grad():

y_hat = net(x).detach().cpu().numpy()

if log_time: # Decalared above

y_hat = np.exp(target_scaler.inverse_transform(y_hat.reshape(-1,1))).flatten()

y_true.append(np.exp(target_scaler.inverse_transform(data[1].numpy().reshape(-1,1))).flatten())

else:

y_true.append(data[1].numpy())

predictions.append(y_hat)

kbar.update(i)

predictions = np.concatenate(predictions)

y_true = np.concatenate(y_true)

127/149 [================>...] - ETA: 0s

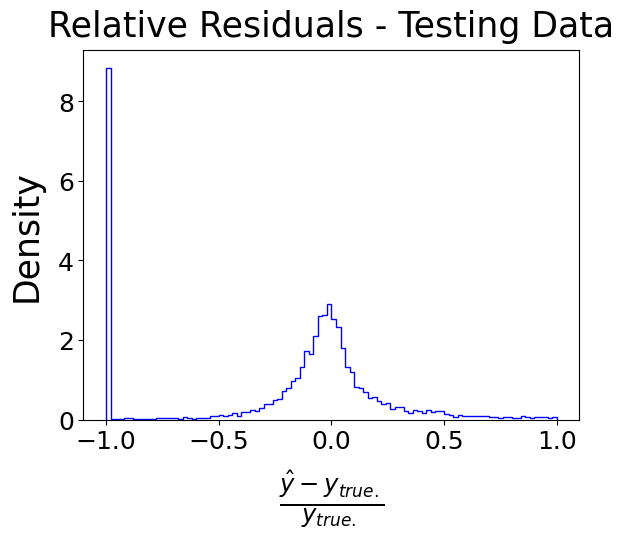

Lets look at the relative residuals.

relative_residuals = (predictions - y_true) / (y_true + 1e-7)

plt.hist(relative_residuals,density=True,color='blue',histtype='step',label='Relative Residuals',range=[-1,1],bins=100)

plt.title('Relative Residuals - Testing Data',fontsize=25,pad=10)

plt.xlabel(r'$\frac{\hat{y} - y_{true.}}{y_{true.}}$',fontsize=25,labelpad=10)

plt.ylabel('Density',fontsize=25,labelpad=10)

plt.tick_params(axis='both', labelsize=18)

Uh oh. We have a large spike at -1. Can anyone hypothesize why this could be the case?

Can you look into the predicted and true values to get some intuition?